Oracle “Physical I/O” ? not always physical

A customer called and wanted to know why the development database was so much slower than production when both databases were on the same type of machine and same type of storage.

To analyze the situation, the same query was run on both databases with

alter session set events '10046 trace name context forever, level 12';

and sure enough, development (SID=dev) showed average I/O almost twice as slow as production:

db file sequential read

db Times Waited Elapsed (ms) Avg Ela (ms)

~~~~ ~~~~~~~~~~~~ ~~~~~~~~~~~~ ~~~~~~~~~~~~

dev 55528 479930 9

prod 65275 294785 5

Now the above is physical I/O as reported by Oracle from looking at the wait event “db file sequential read” in the trace file. Based upon the above data alone, it would look like the “dev” database had an I/O subsystem problem. On the other hand not all “physical I/O” as reported by Oracle is really physical I/O. The I/O reported by Oracle could simple be I/O that is satisfied by the O/S file system cache. When Oracle issues a request for I/O, in this case a “db file sequential read” (aka a single block read request) all we know is Oracle is asking the operating system for the data. We don’t know where the operating system gets the data. The operating system might issue a request for the data from the underlying storage system or it might find the block in the operating system file cache. How can we tell the difference between a read from the underlying storage or a read from the O/S file system cache? Well, from the Oracle perspective there are no statistics that differentiate between the two, but based upon the latency for the I/O request we can make an educated guess. Oracle keeps I/O latency information in the v$event_histogram views, but unfortunately these views only go down to the granularity of 1ms. For I/O from a SAN that is cached in memory on the SAN, the latency could go down to as fast as 10s of micro-seconds. What we want to know is how much I/O is coming faster than a SAN could reasonably supply the data. An 8K data transfer over 4Gb FC takes 20us for the data transfer alone not to mention any O/S scheduling and or code path. Thus a reasonable value for I/O that is faster than a SAN would/could supply would be on the order of of 10s of mircro-seconds. For example 10us is faster than even a 8Gb FC could supply 8k. Now add on some overhead for code path, scheduling etc and 100us (ie 100 microseconds) is a pretty good cut off.

Taking the same trace files used for the above analysis and grouping the I/O requests into latency buckets gives:

db : 10u 50u .1u .2m .5m 1ms 2ms 4ms 8ms 16ms 32m 64m .1 .5 >.5 dev : 1 14 908 13238 6900 9197 15603 9056 265 26 12 12 prod:4419 2713 391 118 22189 2794 1688 2003 11969 14877 2003 105 3 3

What we see is that production gets substantial portion of it’s I/O in less than 10us, ie this data is coming from the file system cache.

In this case the query had only been run once on “dev” but had been run multiple times on prod, thus on prod, the O/S had cached some of this data in the file system cache.

Once the query was run a couple of times on “dev”, then the latency on “dev” when down to the latency on “prod”.

To avoid reading from the O/S cache all together (or for the most part), one can set

filesytemio_options=SETALL

but by doing this alone, it would just increase the latency of the I/O as the O/S file system cache would no longer be used. In order to compensate for the loss of the O/S file system caching, then the buffer cache for the Oracle database should be increased in order to compensate for the loss. If the buffer cache is increased sufficiently to compensate for the lost O/S caching memory, the there will be less CPU used and less I/O and less latency due to I/O calls by Oracle. On the other hand if there are several database on the host then balancing the SGA sizing among the databases without use of O/S file system caching can be more trouble than it’s worth and using O/S file system caching might be more flexible.

How to extract a histogram of I/O latency from the Oracle trace file? Run the script

parsetrc.pl

The script is on github, so feel free to fork the code and make your own changes.

To run, just type

./parsetrc.pl sid_ora_29908.trc

where “sid_ora_29908.trc” is the name of your trace file.

The output looks like:

----------------------------------------------------------------------------

Time Breakdown (seconds)

----------------------------------------------------------------------------

elapsed 3690

total wait 1525

cpu 1327

unaccounted 837

idle 0

----------------------------------------------------------------------------

Summary of Activity in trace file

----------------------------------------------------------------------------

event count total secs avg ms

1) ELAPSED 3690

2) CPU 1327

3) db file sequential read 243910 964 3.955

4) local write wait 34485 175 5.095

5) db file scattered read 25147 140 5.598

6) log buffer space 326 118 362.508

7) free buffer waits 164 64 394.329

8) write complete waits 4 29 7394.890

----------------------------------------------------------------------------

Histogram of latencies for:

db file sequential read

----------------------------------------------------------------------------

64u .1m .2m .5m 1m 2m 4m 8m 16m 33m 65m .1s .1s+

109572 782 118 345 10652 21701 15502 43635 34636 5270 1300 258 139

----------------------------------------------------------------------------

Histogram of latencies by I/O size in # of blocks for:

db file scattered read

direct path read

direct path read temp

----------------------------------------------------------------------------

64u .1m .2m .5m 1m 2m 4m 8m 16m 33m 65m .1s .1s+

db file scattered read

4 46 3 1 0 2 2 11 1 4 2 1 0 0

32 0 0 3436 14534 617 342 1542 852 834 642 361 295 186

The part of interest is “Histogram of latencies for: ”

The first histogram section is for “db file sequential read”, ie single block reads.

The next histogram section is for multiblock reads. These multiblock reads are grouped by read type and for each read type the I/O is broken down by I/O size in number of blocks. Number of blocks is the first field.

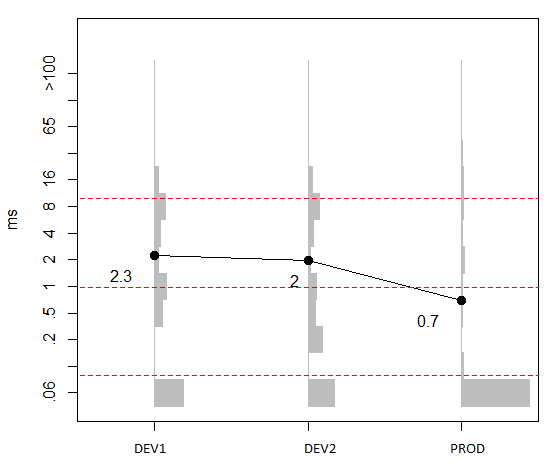

Here is an example of plotting using R the average I/O latency on three databases. The gray bars are histogram buckets of the count of I/Os of this latency range. It’s clear to see that the fastest average I/O latency of “PROD” at 0.7ms has most of the I/O coming from file system cache ie under 0.100ms.

Trackbacks

Comments

Nice stuff, it makes me think that I have to look into this R thing, it might save me hours of painfull discussions with my system admin…gosh I was one of them before, now I can’t suffer them any more…:)

Hi Kyle

nice article, thanks. I want to add a link to OraSRP which can produce similar histogram output. Here’s a text example (html is the default with a graph):

[sourcecode]

db file sequential read

———————–

value ————- Distribution ————- count

0.000004s | 0

0.000008s | 15

0.000016s |@@@ 113

0.000032s |@@@@@@@ 204

0.000064s | 6

0.000128s | 26

0.000256s |@@@@@@@@@@@@@ 387

0.000512s |@@@ 96

0.001024s |@ 45

0.002048s | 10

0.004096s | 27

0.008192s |@@@@ 132

0.016384s |@ 54

0.032768s | 10

0.065536s | 5

0.131072s | 1

0.262144s | 0

[/sourcecode]

@Timor,

Thanks for the note on OraSRP. I hadn’t realized that gave histograms now. Here is a list of 10046 parsing tools out there: http://ba6.us/node/177

Cary’s Method-R tools are the best by far but of course they are pay for tools.

OraSRP looks fantastic. I haven’t used it in ages. One thing I liked about OraSRP was trying to distinguish idle SQL*Net message from client waits from SQL*Net message from client waits that occur between fetches and are a more clean indicator of some application issue. Unfortunately OraSRP is only available on some platforms and the source isn’t available.

I like having scripts because it’s nice to be able to modify and with scripts the should/could run anywhere.

– Kyle

Looking at OraSRP from

http://www.oracledba.ru/orasrp/sample3.html

it looks like the current sections are:

Summary (dbid,node,sid,vers,#SQL[#user,#recur],#trx[#rollback,#readonly]

Session Flat Profile (Top Event breakdown)

Top 5 Statements per Event ( for each event list the top sql for that event)

Session Call Graph ( graph of recursive SQL)

Events Histograms

SQL Statements

Statement Cumulative Statistics

Statement Self Statistics

Statement Flat Profile (top events)

Statement Plan

Statement Binds Statistics

Good stuff.

Session call graph is nice as is organizing Top SQL for each top EVENT.

The example http://www.oracledba.ru/orasrp/sample2.html

shows I/O latency by I/O size which is nice.

The event histograms and the latency by I/O size are the two main things I was addressing parsetrc.pl. Some other things that are in parsetrc.pl that are still being polished are MB per second, max and min I/O throughput of any single I/O grouped by second broken out by I/O type – sequential, scattered, direct path, direct path temp.

Also I/O rates per minute.

Also putting together are compact table of SQL with just the SQL_ID the PLAN HASH and elapsed, cpu, wait, unaccounted for, ordered by elapsed as well as CURSOR_ID (the ID used in the trace file)

Looks like I have another entry for my page :) I seem to get a lot of hits on my 10046 tool page so I gave it a pretty alias and will try to keep it up to date. http://ba6.us/oracle_sql_trace_analysis_tool_list

I am in the middle of the same sort of investigation but unfortunately my Prod is much slower than Dev… even with the same cloned data behind them. I have traces collected and was going to make my way through the list… Thinking I will try your script and OraSRP first.

And thanks for putting your stuff out there on GitHub. I just started shovel… errr moving some of my old code out there as well. I have some Java 10046 parsing code that I was working on that I need to tidy up and make available. Until I can get my company to spring for MethodR tools I will have to rely on my own code and the kindness of folks that release their code into the wild.

Hi David,

Thanks for adding parsetrc.pl to your page on trace parsers and thanks for the page in general. I’ve found it helpful.

RE: github – yeah, github looks awesome. Actually it looks so nice it’s hard to believe. Looking forward to using it more. Also https://gist.github.com looks nice for sharing code in URLs and blogs

– Kyle

Hi Kyle- Thanks for sharing, you have mentioned “How can we tell the difference between a read from the underlying storage or a read from the O/S file system cache? ”

Does that (file system cache ) apply even when we use ASM ? Please clarify