I don’t consider myself a programmer and my exposure to some of these languages is quite superficial, so please feel encouraged to jump into this discussion with your experiences, corrections and comments.

What language and UI would you use to create an Oracle monitor? Why?

What are the pros and cons ? I want a tool to be

- easy to install

- quick to program

- have interactive graphics

- run fast

- web enabled

These goals are hard, if not impossible, to achieve in the same product. A product’s choice of programming language will be limited if interactive graphics are involved. I think graphics are the only way to make a tool easy to use and information easy to grasp but graphics definitely limits the coding options. A really nice option, and practically a commercial requirement is to run in a web browser and running in a browser even further limits the the coding options and and the possibilities of achieving all the goals

What programming languages could be used? Some choices are

- C

- SQL*Plus

- PL/SQL

- perl

- python

- tcl/tk

- ruby(on rails)

- Java fat client

- Java web app (with Flex)

- Delphi

- Visual Basic

- Apex

And tangential languages

- ksh(bash/sh/csh) with AWK

- HTML5

Here is a quick (5 minute off the cuff ) impression of the languages

Do they require an install? or can the be run immediately with simple executable?

Are interactive graphics possible (within reason)

Are they easy or difficult to program in?

Do they have cross platform support? (*nix, mac, win)

Are they web enabled?

Are the fast or are they memory (and CPU) hogs?

Do you have to pay for the development platform?

Installation:

To have an absolutely brain dead install would require the tool to be written in C, Delphi or VB as these are compiled into single executables. All the other languages, AFAIK, require the language interpreter or engine to be installed and generally require Oracle libraries to be there. Actually, C, Delphi and VB might require Oracle libraries as well, but at least for C, it seems like there would be a way to link a static executable. Oracle is not required for most Java applications as they are generally shipped with a JDBC driver for Oracle, though it’s just another file to scatter about on your file system as part of a complicated install. On the other hand Embarcadero has a neat option where everything, even in a java app, is packaged into one executable called an “instant on” executable, much more akin to running a self contained executable on UNIX via some windows magic in the sauce.

The installs required by other products vary. I’d say most are pretty easy, though how easy depends largely on how the language environment is packaged along with the tool. If the tool does no prepackaging and lets the user set up the required packages then installation can be a nightmare. A tool like ASHMON written in TCL/TK for examples requires graphing package, thread package, math extension and an Oracle connection package. I’ve packaged all these up into a single download which still requires the Oracle client libraries to be pre-installed. All and all it takes a good bit of upkeep to maintain the binaries of all packages on the platforms people might use and even upon installation it doesn’t have single executable, but an execution shell that has to launch and find the scripts which depend upon directory locations and it has to find the Oracle install and tnsname.ora. It would be so awesome if I could just make one executable with everything. So installation for Perl, Python and ruby would be similar, AFAIK. A java app is a bit better – one executable though it depends on java already being installed, and for APEX – I believe all sorts of stuff has to be installed and configured on an Oracle database and either installing a full Oracle database for the tool or requiring the target database to have APEX installed is a lot to ask for.

The software that is most likely to be installed is SQL*Plus but even SQL*Plus may not always be available on a customers laptop or desktop. My laptop did not have SQL*Plus until yesterday, almost 2 months after I started using the laptop. I was just using SSH and other tools. Yesterday I finally installed SQL*Plus to start working on a new performance monitoring tool. Why a new tool? I want a new tool that will give me performance data from customer’s Oracle databases that I can review at a later point in time. I want a tool that the customer can run as quickly and easily as possible and that requires the least amount of user knowledge and interaction. Beyond the goal of collection performance data, I would also want the tool to give some immediate feedback on performance. Of course SQL*Plus should be the easiest thing to use. Maybe the laptop or desktop does not have SQL*Plus installed, but in the realm of Oracle performance, nothing should be more reasonable than expecting the customer to have SQL*Plus installed on the desktop/laptop.

Graphics

AFAIK, the only reasonable interactive graphics options are Java Applications, Java in the web using FLEX (SGV was an option but is been shelved), TCL/TK, Ruby with RMagick, Visual Basic and Delphi. Not such luck for SQL, PLSQL, Perl or Python.

Apex may have some, but not sure how robust they are.

Java has gobs, but how good they are, not so sure and how do you find the right one? Many are pay for and I’d like to first commit to Java before paying and I want to be sure the package I’m buying is the best. Ideally I’d rather not have to pay

TCL’s BLT package has a quite good graphing library.

For graphs, there are lots of small but important options – auto resize, filled area stacking, log scales, mixing bars and lines, easily adding and deleting items from the graph, actions on graph contents triggered by mouse passing over or clicking, etc. Sure, graphing can be done in almost anything, but having a package to take care of all the minutia is the only kind really worth my time. TCL’s BTL graphs also use “vectors” or very efficient lists of numbers. I can act on these lists – divided two lists, add to lists, multiply a list by a value or modify points, add points, delete points and have the graph updated immediately.

Delphi and Visual Basic have graphic packages though, like JAVA, there are lots of options and many are pay for.

Ruby seems to have Rmagick. Not sure if there are other options.

Java web applications have Flex which requires Adobe’s IDE.

Data Manipulation

SQL and PL/SQL of course are pretty simple – at least they provide the ability to collect and manipulate data in a far more powerful and easy way than any other language. The con with SQL of course is it’s not procedure. PLSQL is of course procedural but often leads to creating things in the database like global temporary tables, packages and procedures and creating things in the target database is a “no no” for me. I want to affect as little as possible the database I’m monitoring. The best approach is to collect the data via SQL or even PLSQL with as little manipulation and then manipulate the data on a local, not target database. I’ve done this in the past with AWK but it’s tedious and brittle. Similarly I’ve manipulated the data in TCL and again, though clearer than AWK it’s still quite tedious. I’ve tried it in JAVA and JAVA just gives me the hebee jebees – it might not be as tedious, but I just get the feeling that the data structures are inefficient, non-scalable, slow and memory intensive. Another maybe better alternative is using a locals database but this requires installing a databases which is a bit overkill at least if the database is Oracle. The solution to this is to use an embeddable database like HSQL, H2, SQLite or firebird (is Derby embeddable?) For other languages we could also manipulate the data in local structures or objects but tacking aggregations, groupings, sums, averages, counts etc is just so tedious in code and so easy and effortless in a database. I assume it’s pretty easy to embed a databases in almost all the languages except maybe C because then I’d assume we’d be loosing the advantage of having C be an easy to install executable, though maybe it’s easy.

I started to play with SQLite and TCL a several years ago and though it may be simple it was certainly taking me more time than just interfacing TCL with a running Oracle database.

Beyond whether to use a database or not and how, there is the question of how productive is a coder in a language. The lower level the language the more tedious. For example C, though elegant and fast, is quite tedious. At the other end is something like Delphi which is a piece of cake. Another aspect is just how heavy the language is. I find Java heavy. The overhead to set up simple functionality feels simply crippling to me.

UI layout

Delphi is easy to layout UI.

TCL/TK is difficult.

I think Java is somewhere in between

For SQL the only UI is just hacking with ASCII – generally not pretty.

Ruby – I don’t know.

Flex – not sure either though I’d guess its good though no where near as easy as Delphi.

VB – I assume it’s like Delphi.

Perl and Python – not much options unless they are married to something like TK.

Apex – don’t know, I’ve head good things but it is Oracle and from what I’ve seen of APEX it’s not a cakewalk, at least to get up and running and ready. Maybe when all the pieces are in place its straight forward.

Coding

Java IMO seems like crap – it’s like “new money” , you know those LA mansions that are gaudy fake, expensive and needlessly huge.

Perl is not pretty but at leasts it’s efficient. You don’t get any LA mansions in Perl. It’s more like a souped up jalopy. It’s sort of junky but it runs fast.

Python seems good to me.

Ruby seems like a cross between TCL and Python – seems nice – sort of zen, though I’ve only written a few lines.

TCL – little strange, but ok – might have been nice but it’s been long forgotten.

Delphi uses a modified Pascal and what’s easier than Pascal?

Performance

Java is a pig.

Flex is a pig.

OK, OK don’t tell me that it’s not the language its the programmer. I’ve never seen a C program take up 600Ms to collect a few K of data and I’ve never seen a Java program run under 50Ms. (more like 100M+)

Delphi is like 10M, runs fast. What’s not to like? (pay for IDE and it’s washed up, the UI looks like so 20 years ago)

C of course is lightning fast.

TCL seems fine, maybe at bit slow, but for the structures I care about like vectors and in graphs have been well optimized

Python seems fast.

Perl – I assume it’s pretty fast. I tried to write a direct memory access program in Perl and it was slower than executing SQL but that’s because the API attached and detached from the Oracle SGA for every bit of data I collected. I theoretically could have gone in and modified the Perl API for shared memory.

Web

Who works on the web for a GUI? Apex, Java with Flex, Ruby on Rails with Rmagick. Rmagick may or may not provide GUI interaction – I have yet to try it. I spent a couple hours last weekend trying to get it to work under Cygwin and eventually gave up and just installed it straight under windows, but have yet to play with it.

Java with Flex is probably the premier solution but it’s fat and the IDE is payfor.

What about HTML5?

Is FLEX an option? maybe for an enterprise application but not for a desktop web enabled application as far as I can tell.

My Story

I’ve been thinking about this for years. I started programming TCL/TK graphics back in 1994. The only other option before 2000 or so was Visual Basic and Delphi . I didn’t hear of Delphi till about 2007 (!) and Visual Basic never seemed like an option since it was limited to Windows. The windows limitation seems much less important now days, but back then I only worked on UNIX and didn’t have a laptop, so TCL/TK was the only option until around 2000 when Java became an option. In 2002 when I started working on OEM 10gR1. When I arrived at Oracle in 2002 to work on OEM, I had no idea it was web based and pure HTML. HTML ?! I had thought that I would be working on the 9i java fat client. The 9i java client was relatively good at least compared to OEM 7 and 8 and I thought with a little facelift the 9i client could be good. I could hardly believe the 10g OEM was HTML. Pure HTML ? No interactive graphics?! and this was the monitor that was suppose to performance shoot, monitor and tune the some of the most important production databases in the world? Are you kidding me? The “solution” back pre-10gR1 was to have use gifs for any graphics. Any change in the gifs causes not a partial page refresh but a full page refresh. and these gifs would only be updated occasionally. The refresh rate on the Oracle home page could be as much as an hour out of date. Wow.

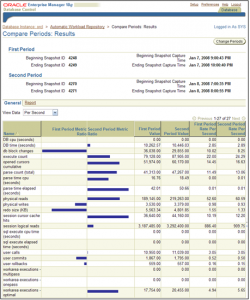

Well we change things – got SVG in, got the refresh rate to be much faster, but OEM is still slow and the data collection has hardly improved in the last 6 years. So much more could be done with some small changes, for example adding the average wait times for different kind of IOs, harnessing the wait histograms better, high lighting some key statistics , trending, forecasting, showing the differences between selected periods clearly, adding more drill down and aggregation options on the ASH data and externalizing in a clear graphical way the new extended row course data in ASH. I had want to get Adobe Flash/Flex into OEM but Oracle was reluctant. The compromise was SVG. OEM does use Flex but I’ve heard that Flex is going to be ripped out in lieu of ADF, and what’s even more twisted is ADF builds it’s graphic components upon Flex. Strange.

In all cases OEM is slow and huge and slow to change. Java, whether it’s in the middle tier or a Java application is memory pig and generally slow.

Summary

- I want the tool to run fast which means using as little CPU and memory as possible.

- I want a tool that’s fast to install.

- I want a tool that has interactive graphics.

- I want a tool that is easy to create and program.

- I want the tool to be web enabled.

For collection, the best sounds like using SQL*Plus with anonymous PLSQL – this could possibly be wrapped in C for a single collection executable.

For aggregation, anything works as long as it has a database.

For UI Delphi sounds like the easiest to program *and* run (possibly VB – anyone know the pros and cons of VB verses Delphi?)

For web – it’s either JAVA and FLEX and a memory pig and a pay for IDE or is RUBY on rails a possibility? Ruby has an Oracle , SQLite and UI packages.

What about HTML5 ? Too new? Does it have Oracle access? does it have an embeddable database? What graphic options does it have?

Kyle Hailey Uncategorized

recent comments