Envisioning NFS performance

What happens with I/O requests over NFS and more specifically with Oracle? How does NFS affect performance and what things can be done to improve performance?

I hardly consider myself an expert on the subject, but I have yet to find a good, clear, targeted description of NFS, and especially NFS with Oracle, on the net. My lack of knowledge could be both a good thing and a bad thing: bad because I don’t have all the answers, but good because I’ll talk more to the average guy and make fewer assumptions. At least that’s the goal.

This post is intended as the first of a number of blog entries on NFS.

What happens at the TCP layer when I use dd to request an 8K chunk of data off an NFS-mounted file system?

Here is one example:

I do a

dd if=/dev/zero of=foo bs=8k count=1

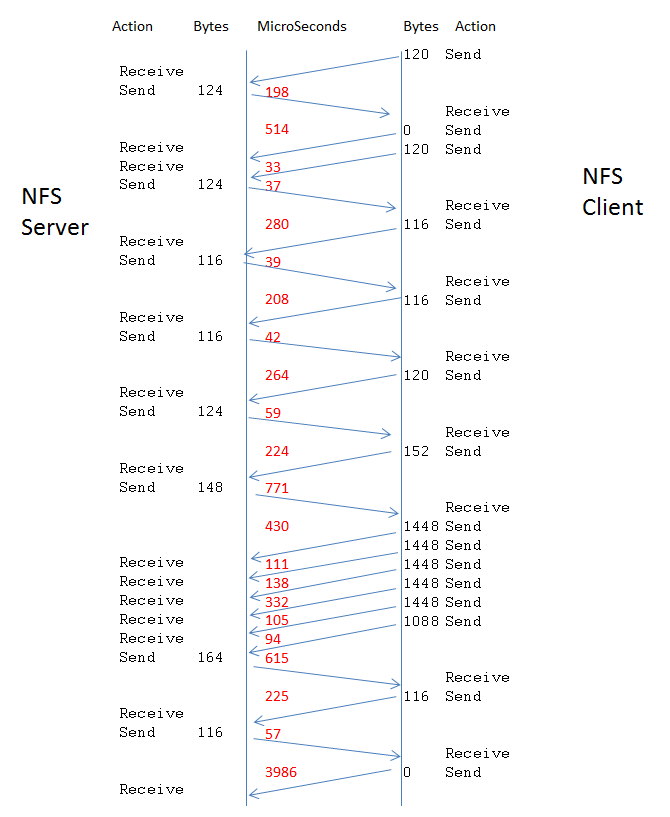

where my output file is on an NFS mount, I see the TCP send and receives from NFS server to client as:

(The tracing code is written in DTrace and runs on the server side; see tcp.d for the code.)
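To see the same request from the client side, here is a minimal Python sketch that times one 8K write. It is not the tcp.d script and not what dd does internally: the function name `time_8k_write` and the target path are hypothetical, and the explicit `fsync` is my assumption for making sure the data actually leaves the client cache before the clock stops.

```python
import os
import tempfile
import time

BLOCK_SIZE = 8 * 1024  # same 8K block size as the dd example


def time_8k_write(path):
    """Write one 8K block of zeros to path, flush it, and return elapsed seconds."""
    buf = bytes(BLOCK_SIZE)
    start = time.perf_counter()
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
    try:
        os.write(fd, buf)
        # Assumption: fsync forces the block out instead of leaving it
        # in the client's page cache.
        os.fsync(fd)
    finally:
        os.close(fd)
    return time.perf_counter() - start


if __name__ == "__main__":
    # Hypothetical target: swap the temp directory for a directory on the NFS mount.
    target = os.path.join(tempfile.gettempdir(), "foo")
    elapsed = time_8k_write(target)
    print(f"8K write + fsync took {elapsed * 1e6:.0f} us")
```

The timing includes the open and close, so on an NFS mount it covers the setup traffic as well as the 8K payload.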

There is a lot of activity in this simple request for 8K. What is all the communication? Frankly, at this point I’m not sure. I haven’t looked at the contents of the packets, but I’d guess some of it has to do with getting file attributes. Maybe we’ll go into those details in future postings.

For now, what I’m interested in is throughput and latency, and for latency, figuring out where the time is being spent.

I’m most interested in latency: what happens to a query’s response time when it reads over NFS as opposed to reading from the same disks without NFS. Most NFS blogs seem to address throughput.

Before we jump into the actual network stack and OS operation latencies, let’s look at the physics of the data transfer.

If we are on 1Ge we can do about 122MB/s, thus

122 MB/s

122 KB/ms

12 KB per 0.1ms (i.e. 100us)

12 us (0.012ms) to transfer a 1500 byte network packet (i.e. the MTU, or maximum transmission unit)

a 1500 byte packet carries IP and TCP headers and only transfers 1448 bytes of actual data

so an 8K block from Oracle takes 5.7 packets, which rounds up to 6 packets

Each packet takes 12us, so 6 packets for 8K take about 72us (interesting to note that this part of the transfer goes down to about 7.2us on 10Ge, if all works perfectly)
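The arithmetic above can be sketched in a few lines of Python. The constant and function names are mine, and counting every packet as a full 1500 byte MTU is a simplification. The per-packet time is kept unrounded here, so the result differs by a couple of microseconds from the hand-rounded figures.

```python
import math

LINE_RATE_BYTES_PER_SEC = 122e6  # ~122 MB/s on 1Ge, the figure used above
MTU_BYTES = 1500                 # bytes per packet on the wire
PAYLOAD_BYTES = 1448             # actual data carried per packet
BLOCK_BYTES = 8 * 1024           # one 8K Oracle block


def packets_needed(block_bytes, payload_bytes=PAYLOAD_BYTES):
    """Number of packets needed to carry block_bytes of data."""
    return math.ceil(block_bytes / payload_bytes)


def wire_time_us(block_bytes, line_rate=LINE_RATE_BYTES_PER_SEC):
    """Microseconds on the wire, treating every packet as a full MTU."""
    per_packet_us = MTU_BYTES / line_rate * 1e6
    return packets_needed(block_bytes) * per_packet_us


if __name__ == "__main__":
    print(packets_needed(BLOCK_BYTES))  # 6
    print(f"1Ge:  {wire_time_us(BLOCK_BYTES):.1f} us")
    print(f"10Ge: {wire_time_us(BLOCK_BYTES, 10 * LINE_RATE_BYTES_PER_SEC):.1f} us")
```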

Now a well-tuned 8K transfer takes about 350us (from testing, more on this later), so where does the other roughly 275us come from?

Well, if I look at the above diagram, the total transfer takes 4776us (about 4.8ms) from start to finish, but this transfer does a lot of setup.

The actual 8K transfer (5 x 1448 byte packets plus the 1088 byte packet) takes 780us, or about twice as long as the well-tuned 350us.
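One way to read these numbers is as the effective throughput of a single outstanding 8K request. The sketch below only rearranges the figures quoted above; the names are hypothetical and nothing here is new measurement.

```python
FULL_SEGMENTS = 5            # full-size data packets seen in the trace
FULL_SEGMENT_BYTES = 1448
LAST_SEGMENT_BYTES = 1088
OBSERVED_US = 780            # time for the 8K payload packets in the trace
TUNED_US = 350               # well-tuned 8K transfer, from testing
LINE_RATE_MB_PER_SEC = 122   # 1Ge figure used earlier


def effective_mb_per_sec(nbytes, elapsed_us):
    """Bytes per microsecond, which is numerically MB/s (10^6 bytes per second)."""
    return nbytes / elapsed_us


total_bytes = FULL_SEGMENTS * FULL_SEGMENT_BYTES + LAST_SEGMENT_BYTES

if __name__ == "__main__":
    for label, elapsed in (("observed", OBSERVED_US), ("tuned", TUNED_US)):
        rate = effective_mb_per_sec(total_bytes, elapsed)
        share = rate / LINE_RATE_MB_PER_SEC
        print(f"{label}: {rate:.1f} MB/s, {share:.0%} of line rate")
```

With one request in flight at a time, the wire sits idle for most of each request, which is why latency rather than bandwidth is the number to chase here.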

Where is the time being spent?

Comments

Hi Kyle,

Did you consider using Jumbo Frames (http://en.wikipedia.org/wiki/Jumbo_frame) in your tests? I’m not a network expert, but an 8kB block should fit into 1 frame, so there would be only one round trip for the block payload.

regards,

Marcin

Yes, I’m planning on going into Jumbo Frames in future posts.

The first thing I want to address is instrumentation and what the breakdown of the full stack is like.

I have only done minimal testing on jumbo frames so far, with no extensive performance tests. For one thing, jumbo frames require that all the players in the communication, i.e. both machines and all the switches and/or routers, support the jumbo frame size. If not, the communication can just hang without any error; I have had that happen. The feedback I’ve gotten from people who should know is that jumbo frames are a minor boost. I do plan to go into that in detail, but first I want the tools to clearly measure the impact.

Hi Kyle,

Thanks for putting this in visual order.

“1Ge we can do about 122MB/s”: is that read MB/s or write MB/s, or does it not matter?

@Vishal

Great question. I was just wondering about that myself this morning. Typical 1Ge links are “full duplex”, meaning that they can transmit on one channel and receive on the other. Each channel has a theoretical max of around 122MB/s each way, so the total aggregate throughput would be over 200MB/s, but in a single direction it would only be ~100MB/s.

https://learningnetwork.cisco.com/message/10572#10572

https://learningnetwork.cisco.com/thread/4094

Very good explanation, Kyle. I guess the server need not confess all the blocks it needs to address. Maybe you might want to check the cache management piece for fine-tuning.

Thank you

-Sreedhar