NFS versus dNFS

Nice write up on SLOB by Yury Velikanov at Pythian: http://www.pythian.com/news/33299/my-slob-io-testing-index/

I ran into the same issues Pythian came up with:

- turn off default AWR
  - dbms_workload_repository.MODIFY_SNAPSHOT_SETTINGS(51120000, 51120000, 100, null)
- reduce db_cache_size (cpu_count didn’t seem to work; the only way I got db_cache_size down was by allocating a lot to the shared pool)
  - *.sga_max_size=554M
  - *.sga_target=554M
  - *.shared_pool_size=450M
  - *.db_cache_size=40M
  - *.cpu_count=1
  - *.large_pool_size=50M
- avoid db file parallel reads
  - *._db_block_prefetch_limit=0
  - *._db_block_prefetch_quota=0
  - *._db_file_noncontig_mblock_read_count=0

The goal was to test NFS versus dNFS.

I didn’t expect dNFS to have much of an impact in the basic case. I think of dNFS as something that adds flexibility, such as multipathing and failover, and takes care of all the mount issues. In some cases, such as on Linux where the maximum number of outstanding RPCs is 128, Oracle dNFS, as I understand it, can go beyond these limits. I’m also guessing that in the case of RAC, dNFS will avoid the overhead of the getattr calls that come with the noac mount option, which is required without dNFS. (On a single instance, noac can be taken off the mount options.)

Setup

DB host: Linux version 2.6.18-164.el5, with sunrpc.tcp_slot_table_entries = 128 set in /etc/sysctl.conf and loaded with sysctl -p. Oracle 11.2.0.2.0.
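Before a long run it can be worth confirming that the slot table setting really is in the file that `sysctl -p` loads. The following is a minimal sketch, not something from the original setup: the helper name `sysctl_conf_value` is hypothetical, and it assumes simple `key = value` lines.

```shell
#!/bin/bash
# Hypothetical helper (not from the original post): print the value that a
# sysctl.conf-style file assigns to a key, or nothing if the key is absent.
sysctl_conf_value() {
    local key=$1 file=$2
    # Skip comment lines, split on "=", trim blanks, keep the last assignment.
    awk -F= -v key="$key" '
        /^[ \t]*[#;]/ { next }
        {
            k = $1; v = $2
            gsub(/[ \t]/, "", k)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (k == key) { found = v }
        }
        END { if (found != "") print found }
    ' "$file"
}

# Example usage against the file named in the setup:
# sysctl_conf_value sunrpc.tcp_slot_table_entries /etc/sysctl.conf
```

The value the running kernel is using can be read with `sysctl -n sunrpc.tcp_slot_table_entries`, provided the sunrpc module is loaded.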

Ran SLOB straight through with the default reader.sql, which does 5000 loops. This took 12+ hours to complete 18 tests: 9 on NFS and 9 on dNFS, at varying user loads.

The stats showed the dNFS impact to be variable depending on the load.

I then changed reader.sql to do 200 loops in order to run more tests faster and get a handle on the standard deviation between tests.

With that change, I ran the tests alternating between NFS and dNFS, i.e. I ran 1, 2, 3, 4, 8, 16, 32 and 64 user loads on NFS, then switched to dNFS and ran the same tests. I did this 4 times.

The stats were a bit different from the first run.

I then ran the test 8 times (i.e. full user ramp-up on dNFS, then NFS, repeated 8 times).

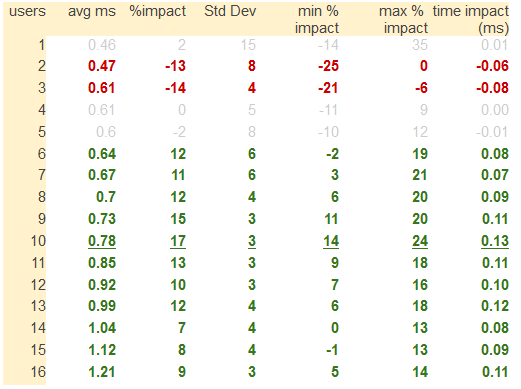

Here are the stats.

There looks to be a fairly consistent performance degradation on dNFS at 2 users and a good performance improvement at 8 users.

At 16 users the test is just under line speed (1GbE, so around 105MB/s).

By 32 users the test is hitting line saturation, so I grayed out those lines.

The NFS server caches a lot, hence the fast I/O times.
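The line-speed remark can be sanity-checked with a little arithmetic. This is a rough sketch under two assumptions that the post does not state: an 8 KB database block size, and every session always having exactly one single-block read outstanding (CPU time between reads is ignored, so the result is an upper bound). The function name is illustrative.

```shell
#!/bin/bash
# Back-of-the-envelope throughput for N sessions doing synchronous
# single-block reads. block_bytes defaults to 8192, which is an assumption:
# the post does not state db_block_size.
est_mib_per_sec() {
    local users=$1 avg_ms=$2 block_bytes=${3:-8192}
    awk -v u="$users" -v ms="$avg_ms" -v b="$block_bytes" 'BEGIN {
        reads_per_sec = u / (ms / 1000)   # each session waits avg_ms per read
        printf("%.1f\n", reads_per_sec * b / 1048576)
    }'
}

est_mib_per_sec 16 1.20    # 16 users at 1.20 ms per read
est_mib_per_sec 32 2.31    # 32 users at 2.31 ms per read
```

With those assumptions, 16 users at 1.20 ms come out at roughly 104 MiB/s and 32 users at 2.31 ms at roughly 108 MiB/s, which is in line with a 1GbE link filling up somewhere between the two.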

% impact = 100 * (NFS time - dNFS time) / NFS time, i.e. how much better (positive) or worse (negative) dNFS is than NFS, in percent

| users | 5000 loops: avg ms | 5000 loops: % impact | 200 loops x4: avg ms | 200 loops x4: % impact | 200 loops x4: Stdev | 200 loops x8: avg ms | 200 loops x8: % impact | 200 loops x8: Stdev |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | 0.44 | 0.62 | 0.43 | -3.68 | 10.76 | 0.45 | -7.90 | 9.24 |
| 2 | 0.43 | -16.62 | 0.49 | -19.95 | 15.21 | 0.49 | -20.97 | 3.67 |
| 3 | 0.57 | 6.61 | 0.57 | -23.40 | 13.49 | 0.56 | -8.62 | 5.63 |
| 4 | 0.64 | 5.98 | 0.58 | -0.80 | 4.97 | 0.60 | -0.86 | 5.12 |
| 8 | 0.76 | 17.17 | 0.69 | 14.04 | 11.33 | 0.68 | 11.80 | 2.25 |
| 16 | 1.29 | 11.86 | 1.20 | 8.56 | 15.70 | 1.20 | 8.34 | 2.23 |
| 32 | 2.23 | -2.85 | 2.31 | 1.83 | 16.41 | 2.33 | 2.04 | 2.31 |
| 64 | 4.23 | -8.38 | 4.62 | 1.75 | 12.03 | 4.67 | 2.43 | 0.76 |
| 128 | 8.18 | 0.73 | 1.86 | | | | | |
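The % impact formula stated with the table can be written as a one-line helper. This is a small sketch; the function name `pct_impact` is illustrative, and the sample inputs are average read times in ms taken from the per-run listings further down.

```shell
#!/bin/bash
# % impact of dNFS relative to NFS, per the formula in the text:
#   100 * (NFS time - dNFS time) / NFS time
# Positive means dNFS was faster. The function name is illustrative.
pct_impact() {
    local nfs_ms=$1 dnfs_ms=$2
    awk -v n="$nfs_ms" -v d="$dnfs_ms" 'BEGIN { printf("%.2f\n", 100 * (n - d) / n) }'
}

pct_impact 0.85 0.74   # dNFS faster than NFS
pct_impact 0.44 0.59   # dNFS slower than NFS
```

Note that the awk pipeline later in the post divides by the dNFS time rather than the NFS time, so its percentages differ somewhat from what this helper prints for the same pair of timings.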

At this point there is a clear degradation at 2 users and an improvement at 8, and the line maxes out just above 16 users, so I decided to run the tests with 1-16 users, 8 times each, and here is the data:

Again, there is an apparent degradation around 2 and 3 users, and the performance improvement peaks around 10 users, with a maximum time saved of 130 µs.

Here is the script to run the tests.

NOTE: you have to be in the directory with “runit.sh” from SLOB, and you have to have backed up libodm11.so as libodm11.so.orig.

slob.sh:

#!/bin/bash
# see: http://www.pythian.com/news/33299/my-slob-io-testing-index/
# if EVAL=0, then script only outputs commands without running them
EVAL=1
USERS="1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16"
REPEAT="1 2 3 4 5 6 7 8"
TYPES="DNFS NFS "

# switch to dNFS: bounce the database with the dNFS ODM library in place
function DNFS
{
sqlplus / as sysdba << EOF
shutdown abort
EOF
cp $ORACLE_HOME/lib/libnfsodm11.so $ORACLE_HOME/lib/libodm11.so
sqlplus / as sysdba << EOF
startup
EOF
}

# switch to kernel NFS: bounce the database with the original ODM library
function NFS
{
sqlplus / as sysdba << EOF
shutdown abort
EOF
cp $ORACLE_HOME/lib/libodm11.so.orig $ORACLE_HOME/lib/libodm11.so
sqlplus / as sysdba << EOF
startup
EOF
}

# write param.sql, which records the non-default parameters and dNFS usage
function param {
for i in 1; do
cat << EOF
select name,value from v\$parameter where ISDEFAULT='FALSE';
select count(*) from v\$dnfs_servers;
select count(*) from v\$dnfs_files;
select count(*) from v\$dnfs_channels;
show sga
exit
EOF
done > param.sql
}

# echo the command held in $cmd and, unless EVAL=0, run it
function runcmd {
    echo $cmd
    if [ $EVAL -eq 1 ] ; then
        eval $cmd
    fi
}

param
for type in $TYPES; do
    [[ ! -d $type ]] && mkdir $type
done

for runnumber in $REPEAT;do
    echo "run number $runnumber"
    for type in $TYPES; do
        if [ $EVAL -eq 1 ] ; then
            eval $type
        fi
        echo "type $type, run number $runnumber"
        for users in $USERS; do
            echo "Users $users"
            for output in 1; do
                cmd="sqlplus / as sysdba @param"
                runcmd $cmd
                cmd="./runit.sh 0 $users"
                runcmd $cmd
                cmd="mv awr.txt ${type}/awr_${users}_${runnumber}.txt"
                runcmd $cmd
            done > ${type}/${type}_${users}_${runnumber}.out 2>&1
        done
    done
done
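Since a full run takes hours, a pre-flight check for the two prerequisites named in the note (runit.sh in the working directory, and the backed-up ODM library) may save a wasted run. The function below is a hypothetical addition, not part of the original slob.sh; it also checks for libnfsodm11.so because the DNFS function copies that file.

```shell
#!/bin/bash
# Hypothetical pre-flight check (not part of the original slob.sh).
# Prints one line per missing prerequisite; returns non-zero if any is missing.
preflight() {
    local slob_dir=$1 oracle_home=$2 missing=0 lib
    if [ ! -x "$slob_dir/runit.sh" ]; then
        echo "missing or not executable: $slob_dir/runit.sh"
        missing=1
    fi
    for lib in libodm11.so.orig libnfsodm11.so; do
        if [ ! -f "$oracle_home/lib/$lib" ]; then
            echo "missing: $oracle_home/lib/$lib"
            missing=1
        fi
    done
    return $missing
}

# Example usage at the top of the test script:
# preflight . "$ORACLE_HOME" || exit 1
```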

To extract the data after the test you can grep out the sequential read performance in the AWR files that end in “txt”:

$ grep 'db file sequential read' *NFS/*txt | grep User
DNFS/awr_1_1.txt:db file sequential read 32,032 14 0 88.1 User I/O
NFS/awr_1_1.txt:db file sequential read 34,633 16 0 88.8 User I/O
DNFS/awr_2_1.txt:db file sequential read 88,826 52 1 95.4 User I/O
NFS/awr_2_1.txt:db file sequential read 92,367 41 0 95.1 User I/O
DNFS/awr_3_1.txt:db file sequential read 141,068 92 1 96.0 User I/O
NFS/awr_3_1.txt:db file sequential read 145,057 81 1 96.2 User I/O
DNFS/awr_4_1.txt:db file sequential read 192,897 111 1 95.2 User I/O
NFS/awr_4_1.txt:db file sequential read 197,535 118 1 96.0 User I/O
DNFS/awr_5_1.txt:db file sequential read 246,322 153 1 95.3 User I/O
NFS/awr_5_1.txt:db file sequential read 249,038 141 1 96.2 User I/O
DNFS/awr_6_1.txt:db file sequential read 297,759 181 1 95.4 User I/O
NFS/awr_6_1.txt:db file sequential read 301,305 199 1 96.5 User I/O
DNFS/awr_7_1.txt:db file sequential read 349,475 216 1 95.5 User I/O
NFS/awr_7_1.txt:db file sequential read 352,788 244 1 96.5 User I/O
DNFS/awr_8_1.txt:db file sequential read 402,262 266 1 95.8 User I/O
NFS/awr_8_1.txt:db file sequential read 405,099 282 1 96.5 User I/O
DNFS/awr_9_1.txt:db file sequential read 453,341 306 1 96.2 User I/O
NFS/awr_9_1.txt:db file sequential read 456,009 345 1 96.7 User I/O

Alternatively, calculate the average single block read time in ms:

grep 'db file sequential read' *NFS/*txt | grep User | sort -n -k 5 | sed -e 's/,//g' | awk '{ printf("%20s %10.2f %10d %10d\n", $1, $6/$5*1000, $5, $6 ) }'

DNFS/awr_1_1.txt:db 0.44 32032 14
NFS/awr_1_1.txt:db 0.46 34633 16
DNFS/awr_2_1.txt:db 0.59 88826 52
NFS/awr_2_1.txt:db 0.44 92367 41
DNFS/awr_3_1.txt:db 0.65 141068 92
NFS/awr_3_1.txt:db 0.56 145057 81
DNFS/awr_4_1.txt:db 0.58 192897 111
NFS/awr_4_1.txt:db 0.60 197535 118
DNFS/awr_5_1.txt:db 0.62 246322 153
NFS/awr_5_1.txt:db 0.57 249038 141
DNFS/awr_6_1.txt:db 0.61 297759 181
NFS/awr_6_1.txt:db 0.66 301305 199
DNFS/awr_7_1.txt:db 0.62 349475 216
NFS/awr_7_1.txt:db 0.69 352788 244
DNFS/awr_8_1.txt:db 0.66 402262 266
NFS/awr_8_1.txt:db 0.70 405099 282
DNFS/awr_9_1.txt:db 0.67 453341 306
NFS/awr_9_1.txt:db 0.76 456009 345
DNFS/awr_10_1.txt:db 0.74 507722 376
NFS/awr_10_1.txt:db 0.85 508800 433

Or, to get the averages, max, min and standard deviations:

grep 'db file sequential read' *NFS/*txt |
grep User |
# lines look like
#   NFS/awr_9_6.txt:db file sequential read 454,048 359 1 97.0 User I/O
# first number is count
# second number is time in seconds
# names are like "NFS/awr_9_6.txt:db file sequential read "
# break 9_6 out as a number 9.6 and sort by those numbers
sed -e 's/_/ /' |
sed -e 's/_/./' |
sed -e 's/\.txt/ /' |
sort -n -k 2 |
sed -e 's/,//g' |
# lines now look like
#   NFS/awr 9.6 :db file sequential read 454048 359 1 97.0 User I/O
# $2 is userload.run_number
# $7 is count
# $8 is time in seconds
# print out test # and avg ms; the DNFS line sorts ahead of the NFS line
# for the same test, so each output line is: test dNFS_ms test NFS_ms
awk '
{ printf("%10s %10.2f ", $2, $8/$7*1000 ) }
/^NFS/ { printf("\n"); }
' |
# print out user load and the avg ms of the pair
# print out %impact of dNFS, computed here as (NFS - dNFS) / dNFS
# the END line is a sentinel (one past the last user load) that lets the
# next stage flush the final group
awk '{printf("%10s %10.2f %10.2f\n",int($1),($4+$2)/2,($4-$2)/$2*100) }
END{printf("%d\n",17) }
' |
# for each test, each user load
# print avg ms, avg impact, std of impact, min and max impact

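awk '
# NOTE: the loop body is reconstructed from the description above and the
# output columns below; the population form of the standard deviation is
# an assumption.
function report(    i, sum_ms, sum_imp, avg_imp, var, min, max) {
    if (nrow == 0) { return }
    min = max = imp[1]
    for (i = 1; i <= nrow; i++) {
        sum_ms  += ms[i]
        sum_imp += imp[i]
        if (imp[i] > max) { max = imp[i] }
        if (imp[i] < min) { min = imp[i] }
    }
    avg_imp = sum_imp / nrow
    for (i = 1; i <= nrow; i++) { var += (imp[i] - avg_imp) ^ 2 }
    printf("%10s %10.2f %10.2f %10.2f %10.2f %10.2f\n",
           row, sum_ms / nrow, avg_imp, sqrt(var / nrow), min, max)
    nrow = 0
}
BEGIN { row = 1; nrow = 0 }
{
    # a change of user load closes the previous group
    if (row != $1) { report(); row = $1 }
    nrow++; ms[nrow] = $2 + 0; imp[nrow] = $3 + 0
}'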

1 0.46 1.93 14.69 -14.00 35.00

2 0.47 -13.24 8.14 -25.42 0.00

3 0.61 -13.88 4.14 -21.13 -6.25

4 0.61 0.10 5.40 -11.29 8.62

5 0.60 -1.60 7.94 -9.68 11.67

6 0.64 12.10 6.33 -1.54 19.30

7 0.67 10.73 5.53 3.03 21.31

8 0.70 12.46 4.42 6.06 19.70

9 0.73 14.77 2.94 11.43 19.70

10 0.78 17.08 2.91 13.89 23.61

11 0.85 13.48 2.90 8.64 17.95

12 0.92 10.34 2.76 6.90 16.28

13 0.99 11.83 3.92 6.45 18.28

14 1.04 7.23 4.42 0.00 13.13

15 1.12 8.03 4.18 -0.93 13.21

16 1.21 9.37 2.78 5.08 14.04

Comments

12c Orion has dNFS support. Checking on 11.2.0.4.

Maybe you should give CALIBRATE a try on dNFS. I’m not set up to try it, but I should think it will use libnfsodm …

I don’t think you can use Orion to test dNFS. Orion needs block/raw devices or files while dNFS is user space IO.

I think it should potentially be possible to make Orion use the dNFS I/O libraries, and as of 11.2 Orion has some dependencies on libraries in the Oracle home, but I have never seen any mention of dNFS and didn’t dig into it deeply enough.

“12c Orion has dNFS support”

Aha!

@Alex Gorbachev

Do you doubt that? I just asked the guy that owns Orion FWIW.

@Alex Gorbachev

Orion *does not* require block/raw disk. It works just fine against file system files and even opens them O_DIRECT … FWIW.

I thought that’s what I said about files – “Orion needs block/raw devices or files”.

Regarding 12c – I guess I can easily verify it now. :)

@Alex Gorbachev

Ah, you did say that… and you used the word “while” between block/raw and files, so my semantic brain read that as somehow dNFS doesn’t need or use files. No matter.

Your beta 12c might not have it. 12c is really 11gR3 until 12.2 :-)

I just confirmed. 12c will have dNFS Orion, but I had to ask if they’d do 11.2.0.4 as well. We’ll see. I’m sure you know who “they” are in this regard (not some beta program mgrs), so you could ask as well.

Orion does not open files on NFS using O_DIRECT. Blocks, yes.

Hey Kevin,

Thanks for stopping by. Ran calibrate a couple of times. Nice stress test BTW. Then ran the two users load a dozen times. Speeds never went down to the NFS 2 user speeds.

SET SERVEROUTPUT ON
DECLARE
  lat  INTEGER;
  iops INTEGER;
  mbps INTEGER;
BEGIN
  -- DBMS_RESOURCE_MANAGER.CALIBRATE_IO (<DISKS>, <MAX_LATENCY>, iops, mbps, lat);
  DBMS_RESOURCE_MANAGER.CALIBRATE_IO (2, 10, iops, mbps, lat);
  DBMS_OUTPUT.PUT_LINE ('max_iops = ' || iops);
  DBMS_OUTPUT.PUT_LINE ('latency = ' || lat);
  dbms_output.put_line('max_mbps = ' || mbps);
end;
/